Background

ShuffleNet-V2: published at ECCV 2018, an efficient lightweight deep learning model. At the same computational complexity, ShuffleNet-V2 is more accurate than ShuffleNet and MobileNet.

ShuffleNet-V2 Features

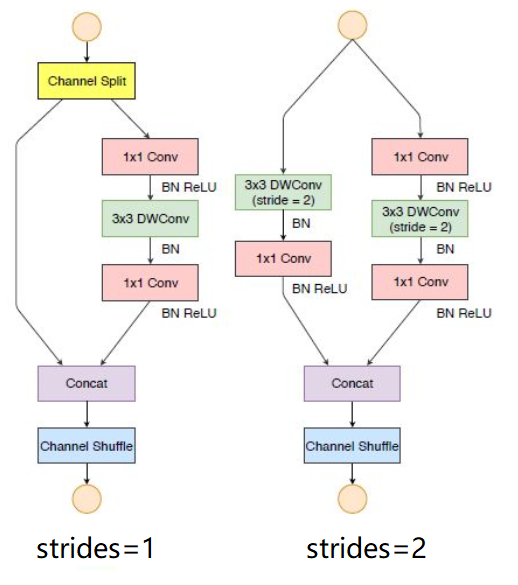

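Borrowing the group-convolution idea from AlexNet, ShuffleNet-V2 introduces channel split and channel shuffle. These reduce the parameter count while strengthening the connections between channels. The outputs of the branches are then concatenated along the channel axis to fuse the features.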
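The split, concatenate and shuffle bookkeeping can be sketched in plain Python. This is a minimal illustration, not the original TensorFlow code. Channels are modeled as a list of labels. The hypothetical `right_branch` argument stands in for the convolutions of a stride-1 unit. All function names are hypothetical. The channel shuffle uses the usual trick: reshape to (groups, n), transpose, then flatten.

```python
def channel_split(channels):
    """Split the channel list into two equal halves (assumes an even count)."""
    half = len(channels) // 2
    return channels[:half], channels[half:]


def channel_shuffle(channels, groups):
    """Reshape to (groups, n), transpose to (n, groups), then flatten."""
    n = len(channels) // groups
    assert n * groups == len(channels), "channel count must be divisible by groups"
    grid = [channels[g * n:(g + 1) * n] for g in range(groups)]
    return [grid[g][i] for i in range(n) for g in range(groups)]


def basic_unit_channels(channels, right_branch):
    """Channel bookkeeping of a stride-1 unit: split, transform one half
    (the other half is passed through unchanged), concatenate, shuffle with 2 groups."""
    left, right = channel_split(channels)
    merged = left + right_branch(right)  # concatenation along the channel axis
    return channel_shuffle(merged, groups=2)


x = ["a0", "a1", "a2", "a3", "b0", "b1", "b2", "b3"]
print(channel_shuffle(x, groups=2))
# ['a0', 'b0', 'a1', 'b1', 'a2', 'b2', 'a3', 'b3']

# hypothetical right branch that only tags each channel as "processed"
print(basic_unit_channels(x, lambda cs: [c + "'" for c in cs]))
# ['a0', "b0'", 'a1', "b1'", 'a2', "b2'", 'a3', "b3'"]
```

After the shuffle, the untouched and the processed channels are interleaved. The next unit's split therefore mixes information from both branches. In a framework, the same shuffle is typically done with a reshape and a transpose on the channel axis of the feature-map tensor.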

Each block uses the idea of depthwise separable convolution to reduce the number of model parameters.
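The parameter savings inside a block can be estimated with a minimal sketch. It makes four assumptions:

- The stride-1 unit layout is the one described in the ShuffleNet-V2 paper: channel split, then 1x1 conv, 3x3 depthwise conv and 1x1 conv applied to one half.
- Biases and BatchNorm parameters are ignored.
- The width is an illustrative 116 channels.
- `plain_3x3_params` is a hypothetical baseline, added only for comparison.

```python
def conv_params(k, c_in, c_out, groups=1):
    """Weights of a k x k convolution; biases omitted (assumed: BatchNorm follows each conv)."""
    assert c_in % groups == 0 and c_out % groups == 0
    return c_out * (c_in // groups) * k * k


def unit_right_branch_params(c):
    """Right branch of a stride-1 unit acting on c // 2 channels:
    1x1 conv -> 3x3 depthwise conv -> 1x1 conv."""
    b = c // 2
    return (conv_params(1, b, b)
            + conv_params(3, b, b, groups=b)  # depthwise: one 3x3 filter per channel
            + conv_params(1, b, b))


def plain_3x3_params(c):
    """Hypothetical baseline: one ordinary 3x3 conv mapping c -> c channels."""
    return conv_params(3, c, c)


c = 116  # illustrative width
print(unit_right_branch_params(c))  # 7250
print(plain_3x3_params(c))          # 121104
```

The left half of the split is passed through unchanged, so it adds no parameters at all.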

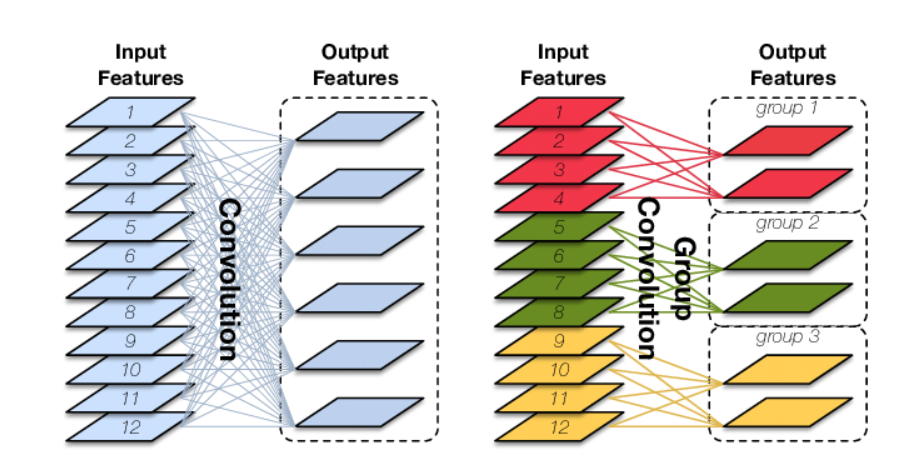

Group Convolution

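Group Convolution: an ordinary convolution is fully connected across channels. Every pixel of the output feature map combines information from all input channels. In a group convolution, the channels are split into groups, and each output pixel uses only the input channels of its own group.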
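The sketch below shows what "each output uses only part of the input channels" means. It is a minimal, plain-Python version of a 1x1 group convolution evaluated at a single pixel, not TensorFlow. `group_pointwise` is a hypothetical helper name.

```python
def group_pointwise(x, weights, groups):
    """1x1 group convolution at a single pixel.

    x: list of C_in input values; weights[o]: list of C_in // groups weights
    for output channel o. Output channel o only reads its own group's inputs.
    """
    c_in, c_out = len(x), len(weights)
    assert c_in % groups == 0 and c_out % groups == 0
    in_per_group, out_per_group = c_in // groups, c_out // groups
    out = []
    for o, w in enumerate(weights):
        assert len(w) == in_per_group
        g = o // out_per_group                # group of this output channel
        start = g * in_per_group              # first input channel of that group
        xs = x[start:start + in_per_group]    # only this group's inputs
        out.append(sum(wi * xi for wi, xi in zip(w, xs)))
    return out


x = [1, 2, 3, 4]
# groups=1: ordinary conv, every output sees all 4 inputs
print(group_pointwise(x, [[1, 1, 1, 1]] * 4, groups=1))  # [10, 10, 10, 10]
# groups=2: outputs 0-1 see inputs 0-1, outputs 2-3 see inputs 2-3
print(group_pointwise(x, [[1, 1]] * 4, groups=2))        # [3, 3, 7, 7]
# groups=4 (= number of channels): each output sees exactly one input
print(group_pointwise(x, [[1]] * 4, groups=4))           # [1, 2, 3, 4]
```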

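Its main purpose is to greatly reduce the number of parameters in the network. Suppose a 64x64x256 input passes through a 5x5 convolution and produces a 64x64x256 output. An ordinary convolution needs 256×(256×5×5+1) = 1,638,656 parameters. A group convolution with 32 groups (8 input channels per group) needs 256×(8×5×5+1) = 51,456, roughly 32 times fewer. When the number of groups equals the number of channels, group convolution becomes Depthwise Convolution.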
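The arithmetic above can be checked with a small helper. It assumes one bias per output channel, matching the `+1` in the formulas. `conv_params` is a hypothetical name.

```python
def conv_params(k, c_in, c_out, groups=1):
    """Parameters of a k x k convolution with one bias per output channel.

    Each output channel sees c_in // groups input channels, so it has
    (c_in // groups) * k * k weights plus 1 bias.
    """
    assert c_in % groups == 0 and c_out % groups == 0
    return c_out * ((c_in // groups) * k * k + 1)


standard = conv_params(5, 256, 256)               # ordinary convolution
grouped = conv_params(5, 256, 256, groups=32)     # 32 groups of 8 channels
depthwise = conv_params(5, 256, 256, groups=256)  # groups == channels
print(standard, grouped, depthwise)  # 1638656 51456 6656
print(round(standard / grouped, 1))  # 31.8
```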

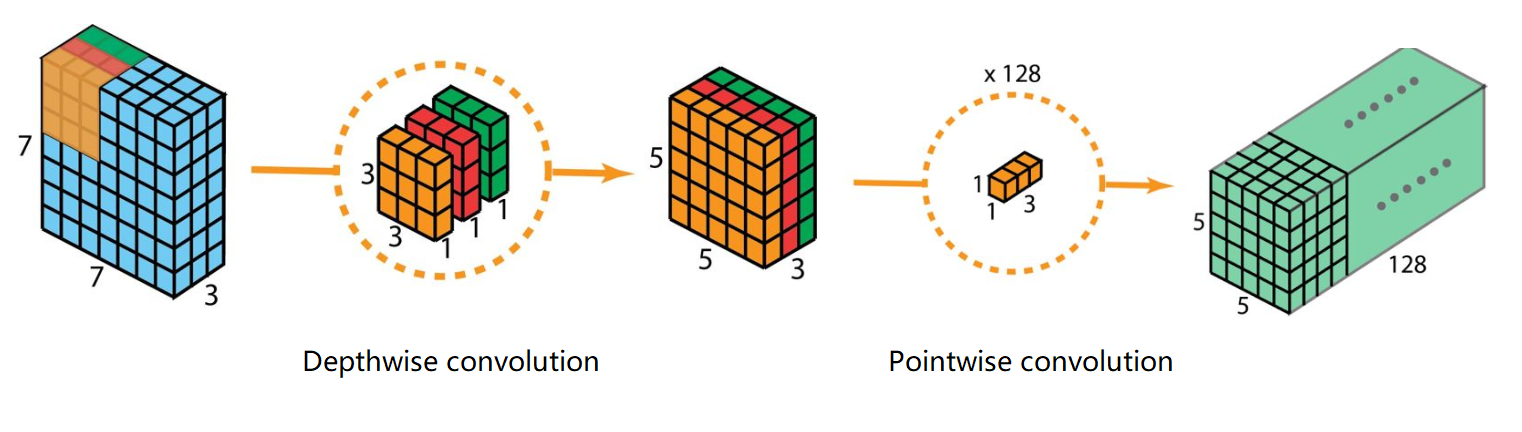

Separable Convolution

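Separable Convolution (depthwise separable convolution): combines two convolutions into a single operation. A depthwise convolution is followed by a pointwise convolution.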

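Step 1: DepthwiseConv convolves each channel separately, with one filter per channel.

Step 2: PointwiseConv applies a 1x1 convolution to the result of step 1, mixing information across channels.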

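Its main purpose is to greatly reduce the number of parameters while still allowing any desired number of output channels. The first step keeps the parameter count low, and the second step sets the number of output channels. Combining the two gives a depthwise separable convolution.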
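Below is a minimal plain-Python sketch of the two steps. It assumes "valid" padding, stride 1, no biases in the forward pass, and nested lists shaped [channels][height][width]. All function names are hypothetical. `separable_params` recomputes the 64x64x256, 5x5 example from the group-convolution section with biases included. Its result can be compared with the 1,638,656 parameters of the ordinary convolution.

```python
def depthwise_conv(x, kernels):
    """Step 1, 'valid' depthwise conv: x is [C][H][W], kernels is [C][k][k].
    Channel c is filtered only by kernels[c] (cross-correlation, as in DL frameworks)."""
    out = []
    for xc, kc in zip(x, kernels):
        k = len(kc)
        h, w = len(xc) - k + 1, len(xc[0]) - k + 1
        out.append([[sum(kc[i][j] * xc[r + i][s + j] for i in range(k) for j in range(k))
                     for s in range(w)] for r in range(h)])
    return out


def pointwise_conv(x, weights):
    """Step 2, 1x1 conv: weights is [C_out][C_in]; mixes channels at every pixel."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(wo[c] * x[c][r][s] for c in range(len(x))) for s in range(w)]
             for r in range(h)] for wo in weights]


def separable_params(k, c_in, c_out):
    """Depthwise (k*k + 1 per channel) + pointwise (c_in + 1 per output), biases included."""
    return c_in * (k * k + 1) + c_out * (c_in + 1)


x = [[[1] * 3 for _ in range(3)], [[2] * 3 for _ in range(3)]]  # 2 channels, 3x3
d = depthwise_conv(x, [[[1, 1], [1, 1]], [[1, 1], [1, 1]]])
print(d)                                    # [[[4, 4], [4, 4]], [[8, 8], [8, 8]]]
print(pointwise_conv(d, [[1, 1], [1, -1]]))  # [[[12, 12], [12, 12]], [[-4, -4], [-4, -4]]]
print(separable_params(5, 256, 256))        # 72448
```

For the 5x5, 256-channel example, the separable version needs 72,448 parameters, about 1/22.6 of the ordinary convolution. The depthwise step alone cannot change the channel count, which is why the pointwise step is needed.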

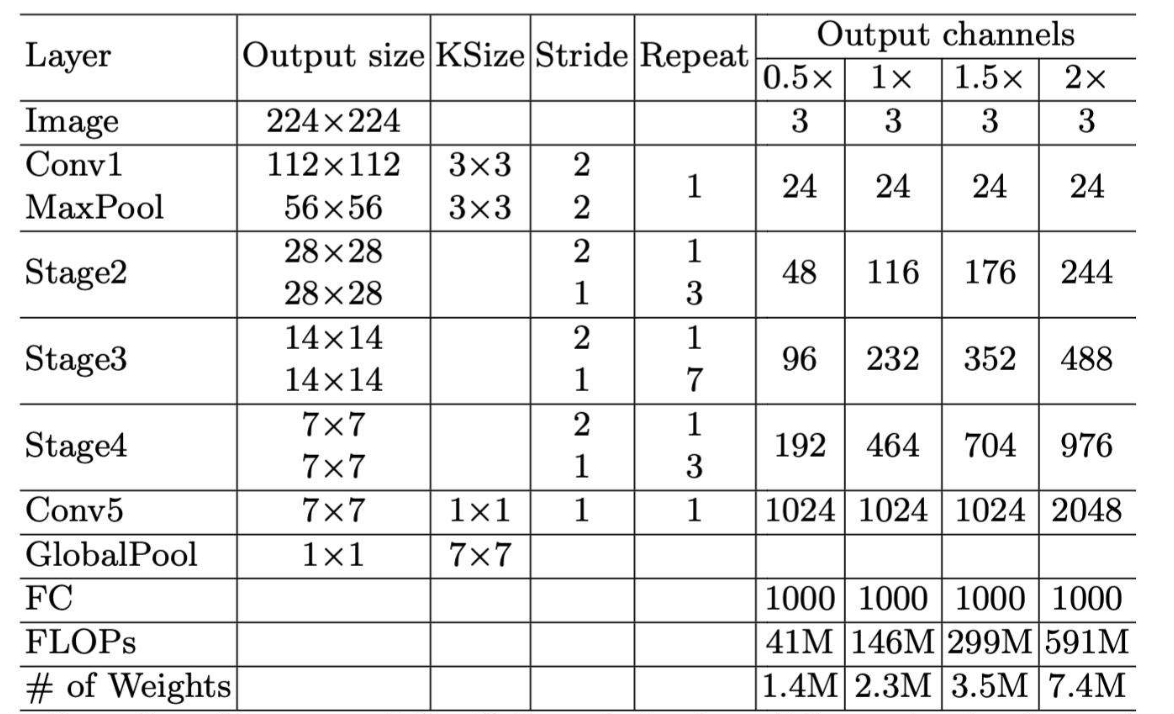

ShuffleNet-V2 architectures at different sizes

ShuffleNet-V2 diagram analysis

TensorFlow 2.0 Implementation

```python
from functools import reduce
```

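ShuffleNet-V2 Summary

ShuffleNet-V2 is an effective lightweight deep learning network with only about 2M parameters. It borrows the idea of group convolution from AlexNet and adds channel split and channel shuffle. These not only greatly reduce the number of model parameters but can also improve the model's robustness.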