Background

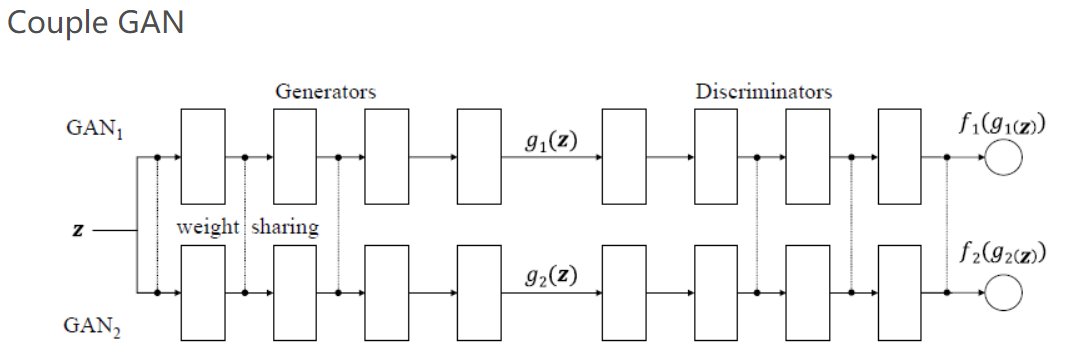

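COGAN (Coupled Generative Adversarial Networks) was published at NIPS 2016. "Coupled" implies at least two models, so COGAN contains two generators and two discriminators, which share some of their network layers to achieve the coupling.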

COGAN Features

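COGAN introduces two GAN models that share some of their network layers, so the pair does more with fewer parameters. In the original paper, the two generators share their first layers, which decode high-level semantics, and the two discriminators share their last layers, which extract high-level features.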
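A minimal sketch of the layer sharing, assuming tf.keras and MNIST-sized 28×28×1 outputs. The layer widths, `LATENT_DIM`, and the function name `build_coupled_generators` are hypothetical, not taken from the original code. Sharing is achieved simply by calling the same layer objects in both generators, so both models read and update the same weights. Only the generator side is shown; in the original paper the discriminators share their last layers instead.

```python
import tensorflow as tf
from tensorflow.keras import layers

LATENT_DIM = 100          # hypothetical noise dimension
IMG_SHAPE = (28, 28, 1)   # assumed MNIST-sized output


def build_coupled_generators(latent_dim=LATENT_DIM):
    """Build two generators whose first two layers are the same objects (shared weights)."""
    # Shared layers: created once and reused, so g1 and g2 hold the same weights.
    shared_1 = layers.Dense(256, activation="relu", name="shared_dense_1")
    shared_2 = layers.Dense(512, activation="relu", name="shared_dense_2")

    z = tf.keras.Input(shape=(latent_dim,))
    h = shared_2(shared_1(z))

    # Domain-specific output heads: each generator owns these weights.
    img1 = layers.Reshape(IMG_SHAPE)(layers.Dense(28 * 28, activation="tanh", name="g1_head")(h))
    img2 = layers.Reshape(IMG_SHAPE)(layers.Dense(28 * 28, activation="tanh", name="g2_head")(h))

    g1 = tf.keras.Model(z, img1, name="g1")
    g2 = tf.keras.Model(z, img2, name="g2")
    return g1, g2
```

Because the shared layers are single objects, a gradient step taken through either generator updates the weights seen by both.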

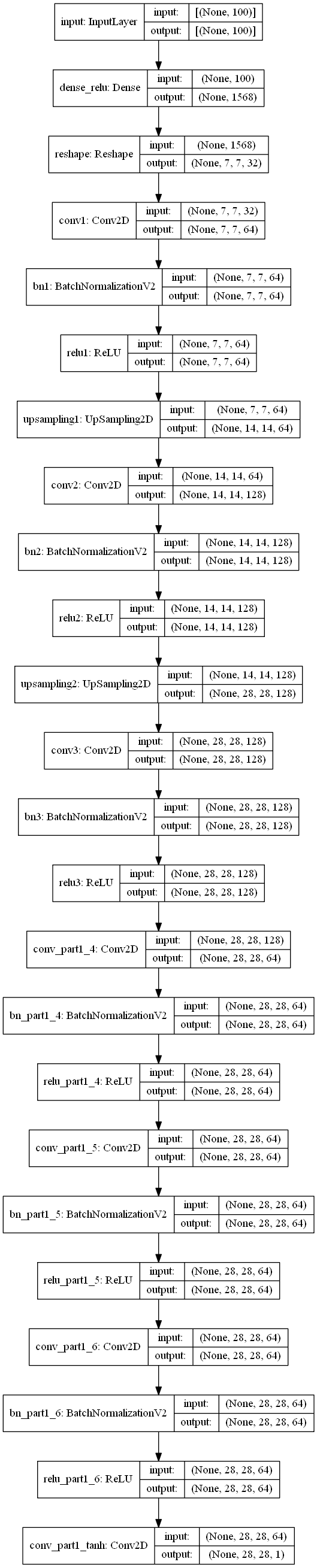

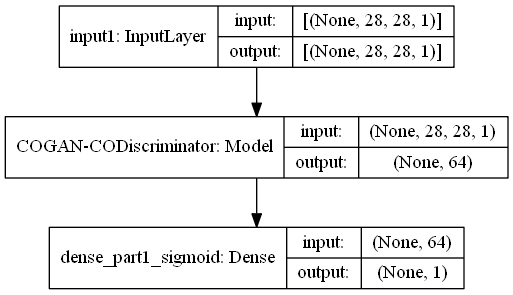

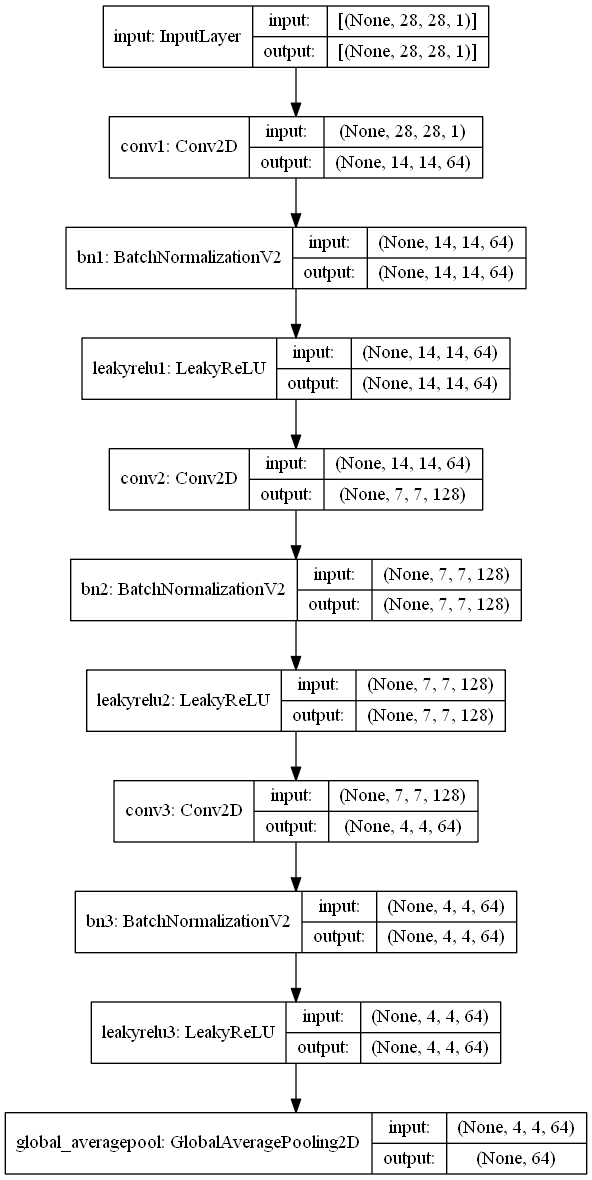

COGAN Image Analysis

TensorFlow 2.0 Implementation

```python
import os
```

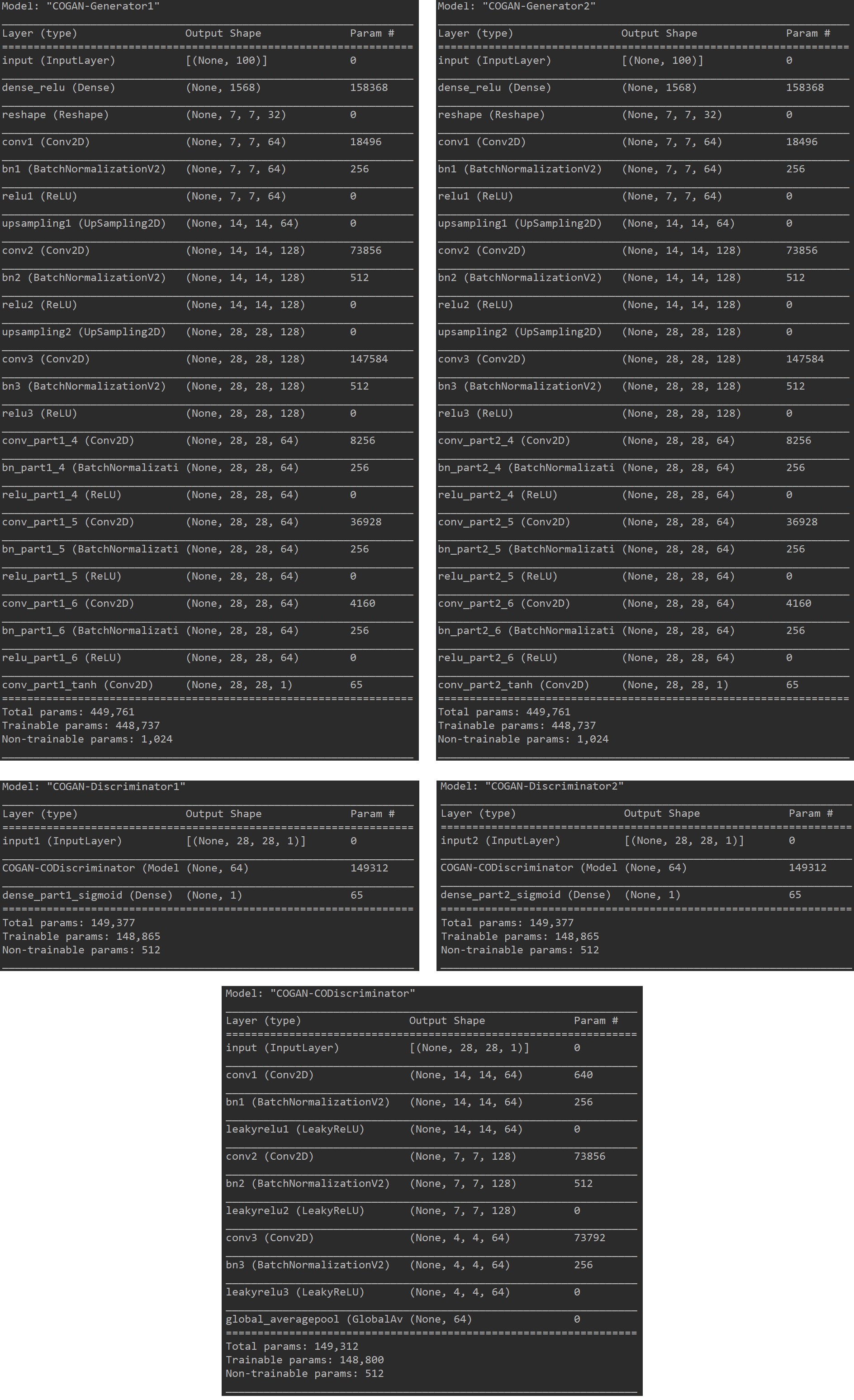

Model Results

Tips

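- Normalize input images to [0, 1] or [-1, 1] first. Network weights are usually small, so normalized inputs keep the computations on a similar scale and help training converge faster.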

- Pay attention to the dimension transformations and the common NumPy and TensorFlow operations in the code; otherwise it may be hard to follow.

- Consider configuring how weights are saved, how the learning rate decays, and when training stops early.

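- COGAN is very sensitive to the network architecture, the optimizer parameters, and layer hyperparameters. When results are poor, the cause is hard to find, and fixing it may take considerable hands-on experience.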

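- Create the discriminator first and compile it, which fixes its training behavior. Then, when building the stacked model used to train the generator, the discriminator must not be trained, so set its `trainable` to False; this does not affect the discriminator that was already compiled. You can check this through the model's private `_collected_trainable_weights` attribute: if it is empty, the model does not train; otherwise it does. The attribute is fixed at compile time, so however `trainable` is changed afterwards, training is unaffected until the model is compiled again.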

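- COGAN's two GANs are not meant to perform a single task, even though I use them for one here. The point of sharing layers is to save feature-extraction parameters, so that one GAN generates one kind of image and the other GAN another kind: for example, model_g1 generates handwritten digits and model_g2 generates mirrored handwritten digits. Training two independent GANs with the same architecture for these two tasks takes 1.2M parameters, while the coupled model takes about 0.6M, and the larger the model, the larger the saving.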
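For the normalization tip, a minimal NumPy sketch assuming 8-bit pixel input in [0, 255]; the function names are hypothetical. The [-1, 1] range pairs naturally with a `tanh` generator output, and the inverse mapping turns generated images back into pixels for saving.

```python
import numpy as np


def to_unit_range(images):
    """Scale uint8 pixels in [0, 255] to float32 in [0, 1]."""
    return images.astype(np.float32) / 255.0


def to_symmetric_range(images):
    """Scale uint8 pixels in [0, 255] to float32 in [-1, 1] (matches a tanh output)."""
    return images.astype(np.float32) / 127.5 - 1.0


def from_symmetric_range(images):
    """Map values in [-1, 1] back to uint8 pixels, rounding before the cast."""
    return np.clip(np.rint((images + 1.0) * 127.5), 0, 255).astype(np.uint8)
```

Rounding with `np.rint` before casting avoids values such as 126.9999 being truncated to 126 on the way back.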
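For the tip about dimension transformations, a sketch of NumPy operations a GAN training loop on MNIST typically needs: adding a channel axis, building a left-right mirrored copy of the digits, and sampling a real batch, a noise batch, and real/fake targets. The helper names, the NHWC layout, and treating "mirrored" as a horizontal flip are assumptions for illustration.

```python
import numpy as np


def add_channel_axis(images):
    """(N, 28, 28) grayscale -> (N, 28, 28, 1), the NHWC layout Conv2D expects."""
    return np.expand_dims(images, axis=-1)


def mirror_horizontally(images):
    """Flip NHWC images left-right; axis 2 is the width axis."""
    return np.flip(images, axis=2)


def sample_batch(images, batch_size, latent_dim, rng):
    """Pick a random real batch, sample Gaussian noise, and build label arrays."""
    idx = rng.integers(0, images.shape[0], size=batch_size)
    real = images[idx]
    noise = rng.normal(0.0, 1.0, size=(batch_size, latent_dim)).astype(np.float32)
    valid = np.ones((batch_size, 1), dtype=np.float32)  # target for real images
    fake = np.zeros((batch_size, 1), dtype=np.float32)  # target for generated images
    return real, noise, valid, fake
```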
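For the weight-saving, learning-rate, and early-stopping tip: GAN loops usually call `train_on_batch` directly, which does not run Keras callbacks such as `ModelCheckpoint` or `EarlyStopping`, so these policies are often written by hand. A minimal plain-Python sketch; the helper names are hypothetical, the decay formula matches `tf.keras.optimizers.schedules.ExponentialDecay` without staircase, and which value to monitor is left open (GAN losses are noisy, so stopping on them is only a heuristic).

```python
import math


def exponential_decay(initial_lr, step, decay_steps, decay_rate):
    """lr = initial_lr * decay_rate ** (step / decay_steps) (continuous, no staircase)."""
    return initial_lr * decay_rate ** (step / decay_steps)


class EarlyStopper:
    """Signal a stop when a monitored value has not improved for `patience` checks."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_checks = 0

    def update(self, value):
        """Record a new value; return (improved, should_stop)."""
        if value < self.best - self.min_delta:
            self.best = value
            self.bad_checks = 0
            return True, False
        self.bad_checks += 1
        return False, self.bad_checks >= self.patience
```

In a training loop one might call `update` every few hundred iterations, save the generator weights with Keras `save_weights` whenever it reports an improvement, and break once it reports `should_stop`.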
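For the compile-then-freeze tip, a minimal tf.keras sketch with hypothetical tiny models (`build_discriminator` and `build_generator` are illustrative, not the original code). Per the Keras documentation, the `trainable` values at compile time are kept until `compile()` is called again, so in tf.keras (TF 2.x) `d.train_on_batch` should still update the discriminator while `combined.train_on_batch` updates only the generator. The public `trainable_weights` property reflects the current flag rather than the compiled state, which is why the discriminator reports no trainable weights after freezing.

```python
import tensorflow as tf
from tensorflow.keras import layers

LATENT_DIM = 100  # hypothetical noise dimension


def build_discriminator(name):
    # Hypothetical tiny discriminator for 28x28x1 images.
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(1, activation="sigmoid"),
    ], name=name)


def build_generator(name, latent_dim=LATENT_DIM):
    # Hypothetical tiny generator: noise -> 28x28x1 image in [-1, 1].
    return tf.keras.Sequential([
        tf.keras.Input(shape=(latent_dim,)),
        layers.Dense(28 * 28, activation="tanh"),
        layers.Reshape((28, 28, 1)),
    ], name=name)


# 1) Build and compile the discriminator first; its trainable state is captured here.
d = build_discriminator("d")
d.compile(optimizer="adam", loss="binary_crossentropy")

# 2) Freeze the discriminator, then stack generator -> discriminator.
d.trainable = False
g = build_generator("g")
z = tf.keras.Input(shape=(LATENT_DIM,))
combined = tf.keras.Model(z, d(g(z)))

# 3) Compile the stacked model: only the generator's weights are trainable in it.
combined.compile(optimizer="adam", loss="binary_crossentropy")
```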
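For the parameter-count tip, a worked example in plain Python with hypothetical fully connected generators (100 → 256 → 512 → 784, first two layers shared). Coupling saves exactly one copy of the shared layers, so the saving grows with the fraction of the network that is shared; these layer sizes are illustrative and do not reproduce the 1.2M and 0.6M figures above.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def total_params(layer_sizes):
    """Parameters of an MLP given its layer widths, e.g. [100, 256, 512]."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


shared = total_params([100, 256, 512])  # layers both generators reuse
head = total_params([512, 784])         # per-generator output layer

separate = 2 * (shared + head)  # two independent generators
coupled = shared + 2 * head     # shared layers counted once
```

With these sizes the coupled pair needs 961,824 parameters versus 1,119,264 for two independent generators.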

COGAN Summary

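COGAN is an effective coupled generative adversarial network. As the figure above shows, the COGAN model has only about 0.6M parameters and can handle several tasks at once, so it is well worth mastering.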