背景介绍

pix2pix:于2017年发表在CVPR上,可以实现图像的风格迁移,风格迁移是GAN网络提出后才出现在人们视野里面的图像处理算法,在生成式对抗网络问世之前,人们很难通过传统的图像处理算法实现风格迁移,今天带小伙伴们看一看瞧一瞧。

pix2pix的特点

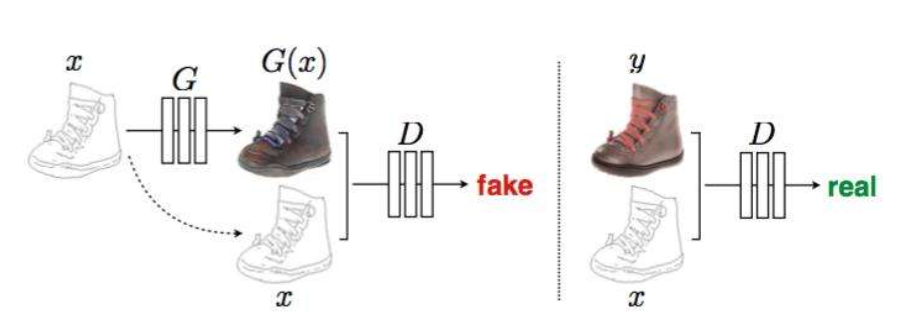

类似于半个DiscoGAN,只是从风格A转换到风格B,没有从风格B转换到风格A。

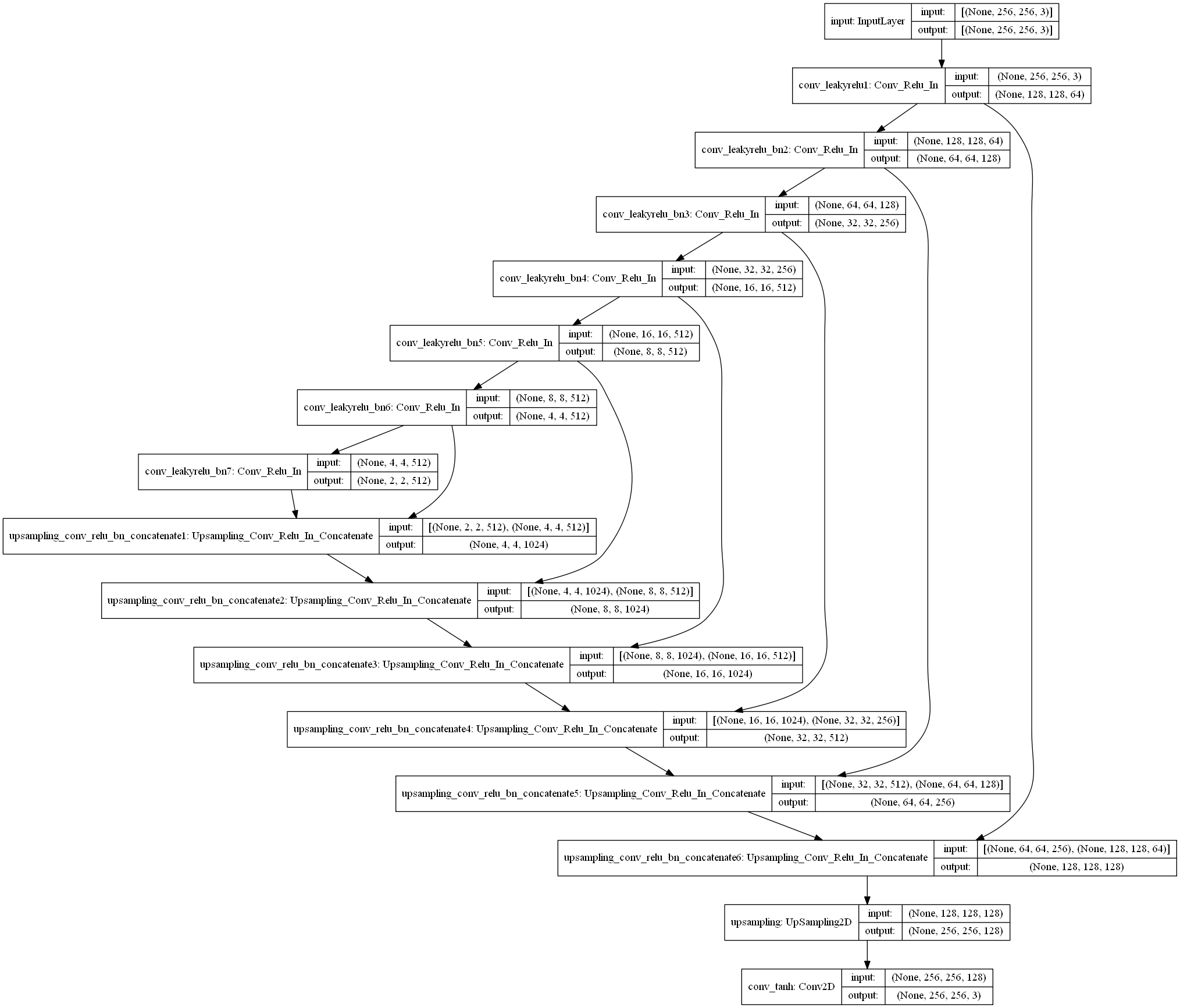

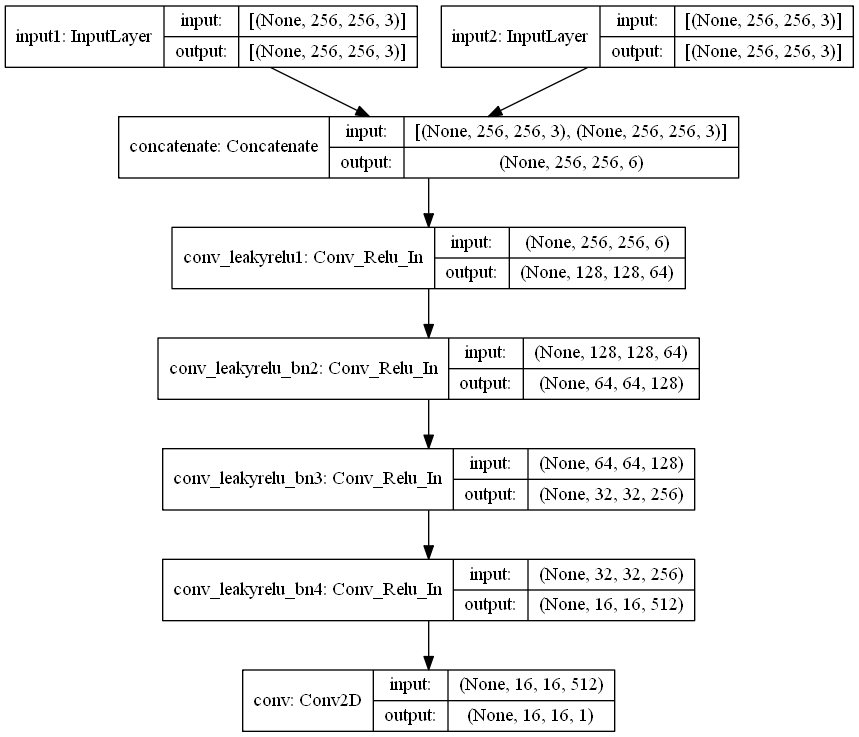

网络结构也类似于DiscoGAN,使用了UNet结构。

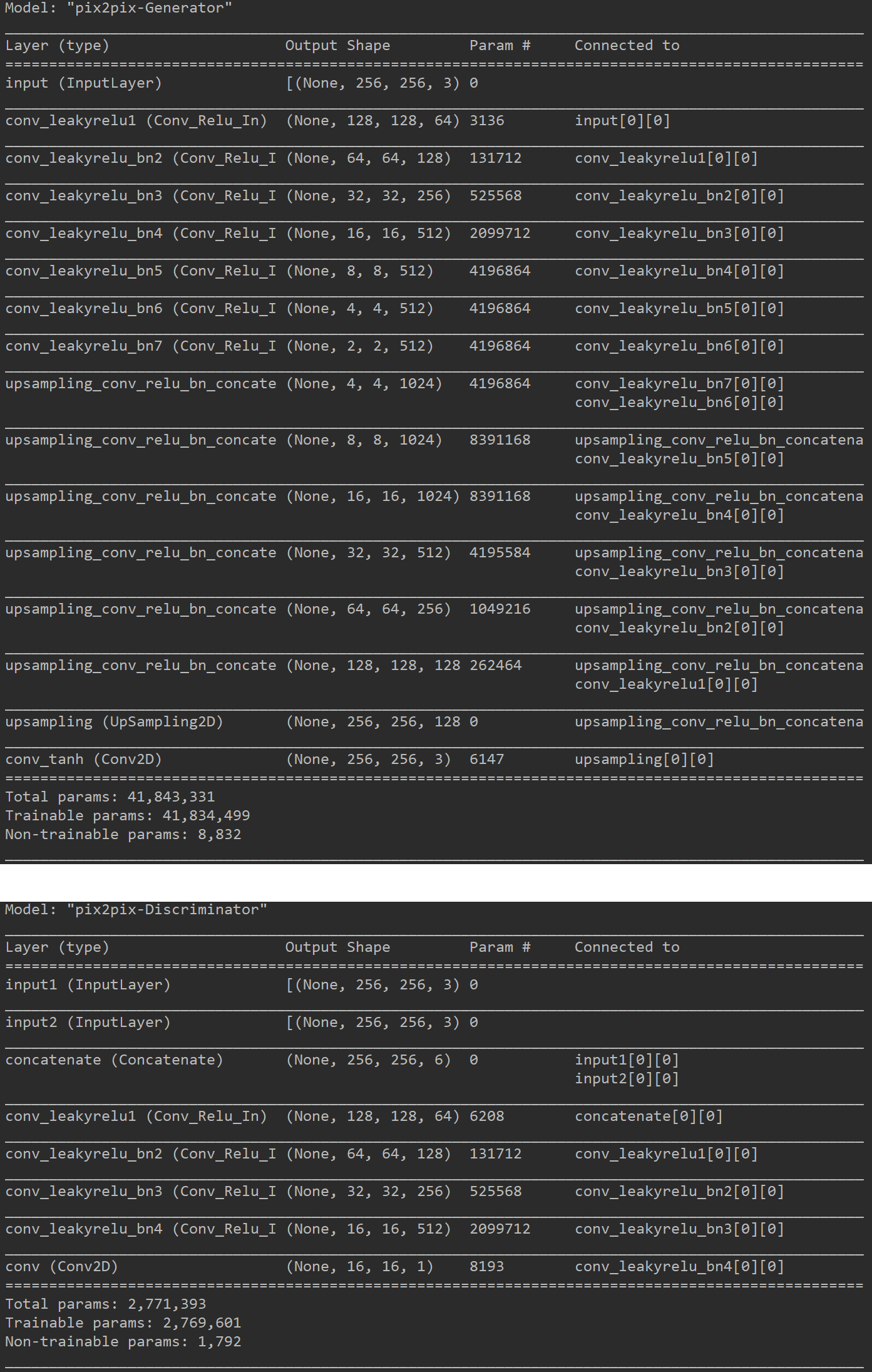

生成器损失函数采用绝对误差,判别器损失函数采用均方误差。

对生成器损失函数的权重进行调节,使网络更多关注于生成的图像质量。

pix2pix图像分析

TensorFlow2.0实现

1 | import os |

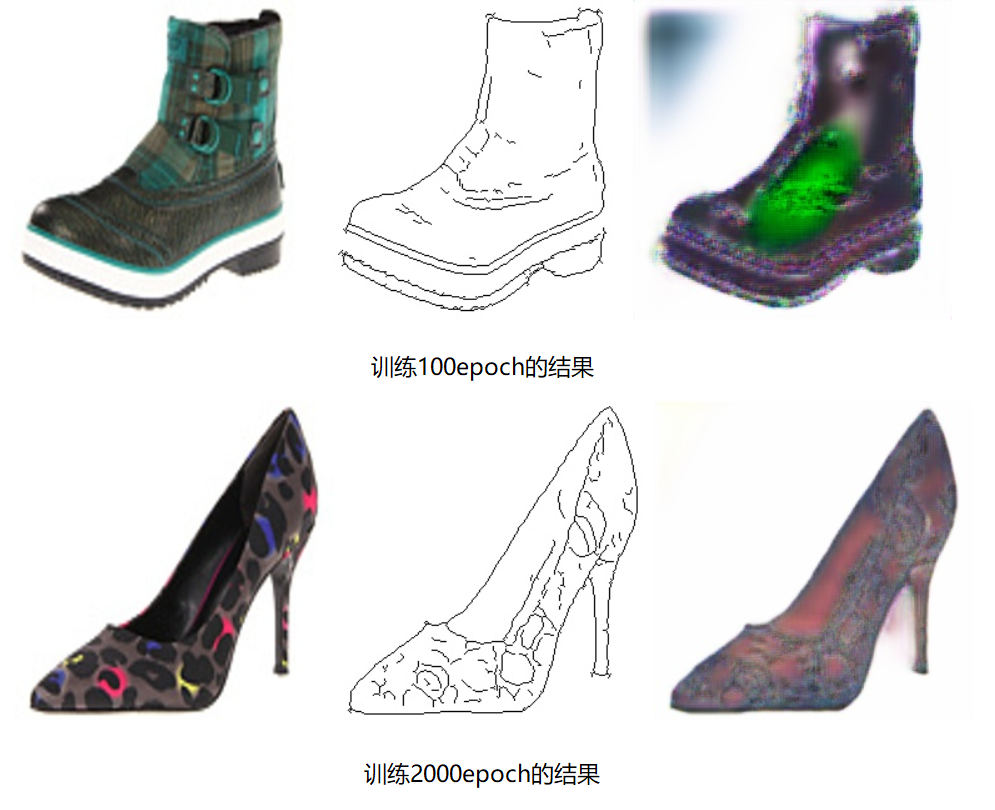

模型运行结果

小技巧

- 图像输入可以先将其归一化到0-1之间或者-1-1之间,因为网络的参数一般都比较小,所以归一化后计算方便,收敛较快。

- 注意其中的一些维度变换和numpy,tensorflow常用操作,否则在阅读代码时可能会产生一些困难。

- 可以设置一些权重的保存方式,学习率的下降方式和早停方式。

- pix2pix对于网络结构,优化器参数,网络层的一些超参数都是非常敏感的,效果不好不容易发现原因,这可能需要较多的工程实践经验。

- 先创建判别器,然后进行compile,这样判别器就固定了,然后创建生成器时,不要训练判别器,需要将判别器的trainable改成False,此时不会影响之前固定的判别器,这个可以通过模型的_collection_collected_trainable_weights属性查看,如果该属性为空,则模型不训练,否则模型可以训练,compile之后,该属性固定,无论后面如何修改trainable,只要不重新compile,都不影响训练。

- 在pix2pix的测试图像中,为了体现模型的效果,第一个图片为风格A的鞋子,第二个图片为风格B的鞋子,第三个图片为由风格B生成的风格A的鞋子,这里只是训练了2000代,而且每一代只有2个图像就可以看出pix2pix的效果。小伙伴们可以选择更大的数据集,更加快速的GPU,训练更长的时间,这样风格迁移的效果就会更加明显。

pix2pix小结

pix2pix是一种有效的风格迁移生成式对抗网络,网络结构,损失函数都和DiscoGAN几乎相同,但是DiscoGAN的生成器和判别器都是两个,可以实现AB风格的互换,而pix2pix只有一个生成器和判别器,因此只能完成风格的单向转换,因此参数量也是DiscoGAN的一半,从上图可以看出pix2pix模型的参数量只有43M,如果数据集足够,还可以生成人物表情包,是不是非常有趣呢?小伙伴们一定要掌握它。